Note

Click here to download the full example code

Lasso with held-out test set¶

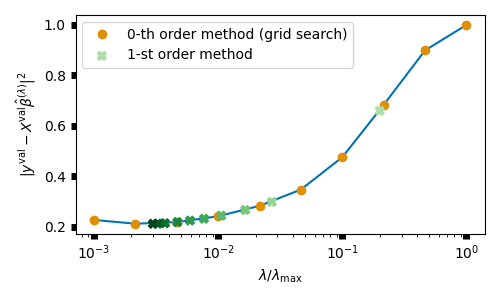

This example shows how to perform hyperparameter optimization for a Lasso using a held-out validation set.

# Authors: Quentin Bertrand <quentin.bertrand@inria.fr>

# Quentin Klopfenstein <quentin.klopfenstein@u-bourgogne.fr>

# Mathurin Massias

#

# License: BSD (3-clause)

import time

import celer

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

from celer.datasets import make_correlated_data

from libsvmdata.datasets import fetch_libsvm

from sparse_ho.models import Lasso

from sparse_ho.criterion import HeldOutMSE

from sparse_ho import ImplicitForward

from sparse_ho.utils import Monitor

from sparse_ho.utils_plot import discrete_cmap

from sparse_ho.ho import grad_search

from sparse_ho.grid_search import grid_search

from sparse_ho.optimizers import LineSearch

print(__doc__)

dataset = 'rcv1'

# dataset = 'simu'

if dataset == 'rcv1':

X, y = fetch_libsvm('rcv1.binary')

else:

X, y, _ = make_correlated_data(n_samples=1000, n_features=2000,

random_state=0)

n_samples = X.shape[0]

idx_train = np.arange(0, n_samples // 2)

idx_val = np.arange(n_samples // 2, n_samples)

print("Starting path computation...")

n_samples = len(y[idx_train])

alpha_max = np.max(np.abs(X[idx_train, :].T.dot(y[idx_train])))

alpha_max /= len(idx_train)

alpha0 = alpha_max / 5

n_alphas = 10

alphas = np.geomspace(alpha_max, alpha_max/1_000, n_alphas)

tol = 1e-7

Out:

/home/circleci/miniconda3/lib/python3.9/site-packages/seaborn/cm.py:1582: UserWarning: Trying to register the cmap 'rocket' which already exists.

mpl_cm.register_cmap(_name, _cmap)

/home/circleci/miniconda3/lib/python3.9/site-packages/seaborn/cm.py:1583: UserWarning: Trying to register the cmap 'rocket_r' which already exists.

mpl_cm.register_cmap(_name + "_r", _cmap_r)

/home/circleci/miniconda3/lib/python3.9/site-packages/seaborn/cm.py:1582: UserWarning: Trying to register the cmap 'mako' which already exists.

mpl_cm.register_cmap(_name, _cmap)

/home/circleci/miniconda3/lib/python3.9/site-packages/seaborn/cm.py:1583: UserWarning: Trying to register the cmap 'mako_r' which already exists.

mpl_cm.register_cmap(_name + "_r", _cmap_r)

/home/circleci/miniconda3/lib/python3.9/site-packages/seaborn/cm.py:1582: UserWarning: Trying to register the cmap 'icefire' which already exists.

mpl_cm.register_cmap(_name, _cmap)

/home/circleci/miniconda3/lib/python3.9/site-packages/seaborn/cm.py:1583: UserWarning: Trying to register the cmap 'icefire_r' which already exists.

mpl_cm.register_cmap(_name + "_r", _cmap_r)

/home/circleci/miniconda3/lib/python3.9/site-packages/seaborn/cm.py:1582: UserWarning: Trying to register the cmap 'vlag' which already exists.

mpl_cm.register_cmap(_name, _cmap)

/home/circleci/miniconda3/lib/python3.9/site-packages/seaborn/cm.py:1583: UserWarning: Trying to register the cmap 'vlag_r' which already exists.

mpl_cm.register_cmap(_name + "_r", _cmap_r)

/home/circleci/miniconda3/lib/python3.9/site-packages/seaborn/cm.py:1582: UserWarning: Trying to register the cmap 'flare' which already exists.

mpl_cm.register_cmap(_name, _cmap)

/home/circleci/miniconda3/lib/python3.9/site-packages/seaborn/cm.py:1583: UserWarning: Trying to register the cmap 'flare_r' which already exists.

mpl_cm.register_cmap(_name + "_r", _cmap_r)

/home/circleci/miniconda3/lib/python3.9/site-packages/seaborn/cm.py:1582: UserWarning: Trying to register the cmap 'crest' which already exists.

mpl_cm.register_cmap(_name, _cmap)

/home/circleci/miniconda3/lib/python3.9/site-packages/seaborn/cm.py:1583: UserWarning: Trying to register the cmap 'crest_r' which already exists.

mpl_cm.register_cmap(_name + "_r", _cmap_r)

Dataset: rcv1.binary

Downloading data from https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/binary/rcv1_train.binary.bz2 (13.1 MB)

file_sizes: 0%| | 0.00/13.7M [00:00<?, ?B/s]

file_sizes: 0%| | 24.6k/13.7M [00:00<02:29, 91.7kB/s]

file_sizes: 0%| | 49.2k/13.7M [00:00<02:29, 91.4kB/s]

file_sizes: 1%|2 | 106k/13.7M [00:00<01:32, 147kB/s]

file_sizes: 2%|4 | 221k/13.7M [00:01<00:52, 257kB/s]

file_sizes: 3%|9 | 451k/13.7M [00:01<00:28, 471kB/s]

file_sizes: 7%|#8 | 909k/13.7M [00:01<00:14, 889kB/s]

file_sizes: 13%|###4 | 1.83M/13.7M [00:01<00:06, 1.71MB/s]

file_sizes: 27%|######9 | 3.66M/13.7M [00:02<00:03, 3.32MB/s]

file_sizes: 38%|#########9 | 5.23M/13.7M [00:02<00:02, 4.10MB/s]

file_sizes: 50%|############8 | 6.81M/13.7M [00:02<00:01, 4.63MB/s]

file_sizes: 61%|###############8 | 8.38M/13.7M [00:02<00:01, 5.00MB/s]

file_sizes: 72%|##################8 | 9.95M/13.7M [00:03<00:00, 5.26MB/s]

file_sizes: 84%|#####################8 | 11.5M/13.7M [00:03<00:00, 5.43MB/s]

file_sizes: 95%|########################8 | 13.1M/13.7M [00:03<00:00, 5.55MB/s]

file_sizes: 100%|##########################| 13.7M/13.7M [00:03<00:00, 3.63MB/s]

Successfully downloaded file to /home/circleci/data/libsvm/binary/rcv1_train.binary.bz2

Decompressing...

Loading svmlight file...

Starting path computation...

Grid search with scikit-learn¶

estimator = celer.Lasso(fit_intercept=False, warm_start=True)

print('Grid search started')

t0 = time.time()

model = Lasso(estimator=estimator)

criterion = HeldOutMSE(idx_train, idx_val)

monitor_grid_sk = Monitor()

grid_search(

criterion, model, X, y, None, None, monitor_grid_sk,

alphas=alphas, tol=tol)

objs = np.array(monitor_grid_sk.objs)

t_sk = time.time() - t0

print('Grid search finished')

Out:

Grid search started

Iteration 1 / 10

Iteration 2 / 10

Iteration 3 / 10

Iteration 4 / 10

Iteration 5 / 10

Iteration 6 / 10

Iteration 7 / 10

Iteration 8 / 10

Iteration 9 / 10

Iteration 10 / 10

Grid search finished

Grad-search with sparse-ho¶

print('sparse-ho started')

t0 = time.time()

model = Lasso(estimator=estimator)

criterion = HeldOutMSE(idx_train, idx_val)

algo = ImplicitForward()

monitor_grad = Monitor()

optimizer = LineSearch(n_outer=10, tol=tol)

grad_search(

algo, criterion, model, optimizer, X, y, alpha0, monitor_grad)

t_grad_search = time.time() - t0

print('sparse-ho finished')

Out:

sparse-ho started

sparse-ho finished

Plot results¶

p_alphas_grad = np.array(monitor_grad.alphas) / alpha_max

objs_grad = np.array(monitor_grad.objs)

print('sparse-ho finished')

print(f"Time for grid search: {t_sk:.2f} s")

print(f"Time for grad search (sparse-ho): {t_grad_search:.2f} s")

print(f'Minimum outer criterion value with grid search: {objs.min():.5f}')

print(f'Minimum outer criterion value with grad search: {objs_grad.min():.5f}')

current_palette = sns.color_palette("colorblind")

cmap = discrete_cmap(len(objs_grad), 'Greens')

fig, ax = plt.subplots(1, 1, figsize=(5, 3))

ax.plot(alphas / alphas[0], objs, color=current_palette[0])

ax.plot(

alphas / alphas[0], objs, 'bo', label='0-th order method (grid search)',

color=current_palette[1])

ax.scatter(

p_alphas_grad, objs_grad, label='1-st order method', marker='X',

color=cmap(np.linspace(0, 1, len(objs_grad))), s=40, zorder=40)

ax.set_xlabel(r"$\lambda / \lambda_{\max}$")

ax.set_ylabel(

r"$\|y^{\rm{val}} - X^{\rm{val}} \hat \beta^{(\lambda)} \|^2$")

plt.tick_params(width=5)

plt.legend()

ax.set_xscale("log")

plt.tight_layout()

plt.show(block=False)

Out:

sparse-ho finished

Time for grid search: 26.69 s

Time for grad search (sparse-ho): 15.32 s

Minimum outer criterion value with grid search: 0.21255

Minimum outer criterion value with grad search: 0.21219

/home/circleci/project/examples/plot_held_out_lasso.py:122: UserWarning: color is redundantly defined by the 'color' keyword argument and the fmt string "bo" (-> color='b'). The keyword argument will take precedence.

ax.plot(

Total running time of the script: ( 0 minutes 55.762 seconds)